Introduction

Definition of AI

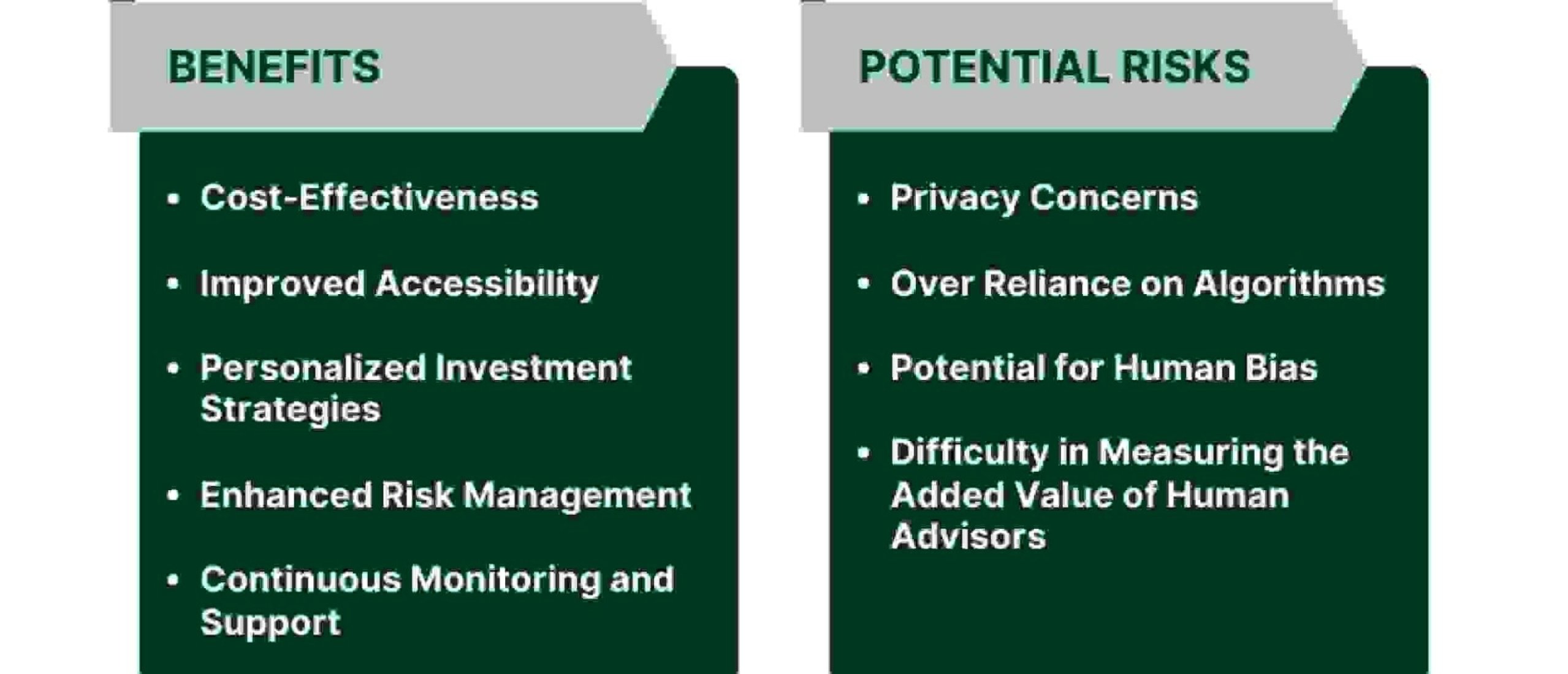

AI, or Artificial Intelligence, refers to the development of computer systems that can perform tasks that would typically require human intelligence. These tasks include speech recognition, decision-making, problem-solving, and learning. AI technology has the potential to revolutionize various industries, such as healthcare, finance, and transportation. However, along with its numerous benefits, there are also risks associated with the use of AI. It is crucial to understand and address these risks to ensure the responsible and ethical development and deployment of AI systems.

Rapid growth of AI

The rapid growth of AI has brought risks as well as benefits. As the technology advances, fear of job displacement and economic inequality is growing: automating tasks once performed by humans could render many jobs obsolete, leading to unemployment and a widening wealth gap. The lack of transparency and accountability in AI algorithms also raises ethical concerns, because decisions made by AI systems can have significant societal impact. In addition, AI systems are often trained on biased data and can therefore perpetuate existing societal inequalities. These risks must be considered and addressed to ensure the responsible and ethical use of AI.

Importance of discussing risks

Discussing the risks of AI matters because the technology is becoming more integrated into many aspects of our lives. Open and transparent conversations about the dangers and challenges it poses make it possible to develop robust safeguards and ethical guidelines that mitigate negative impacts. They also foster a better understanding of AI's limitations and potential biases, so that the technology can be used responsibly and ethically. Ultimately, these discussions help ensure that AI is deployed in a way that benefits society while minimizing potential harm.

Ethical concerns

Bias in AI algorithms

Bias in AI algorithms is a significant risk of using AI. These algorithms make decisions and predictions based on data, so if the training data is biased, the outcomes can be unfair and discriminatory. For example, an algorithm trained on data drawn predominantly from one demographic group may not accurately represent the needs and experiences of other groups. Its decisions can then be biased and perpetuate existing inequalities. Addressing bias in AI algorithms is necessary to ensure that the technology is used ethically and fairly.
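One way to make the idea of an unfair outcome concrete is to compare how often each group receives a favorable decision. The sketch below is illustrative only: the group labels, the decision data, and the function names are assumptions made for this example, and the gap in selection rates (often called the demographic parity difference) is just one of several possible fairness measures.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of favorable decisions for each group.

    `records` is an iterable of (group, decision) pairs, where decision
    is 1 for a favorable outcome and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_gap(records):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())

# Hypothetical decisions: group "A" is approved 3 times out of 4,
# group "B" only 1 time out of 4.
decisions = [
    ("A", 1), ("A", 1), ("A", 1), ("A", 0),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = selection_rates(decisions)
gap = demographic_parity_gap(decisions)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```

A gap of zero would mean every group is selected at the same rate. A large gap does not prove discrimination on its own, but it is a signal that the training data and the model deserve a closer look.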

Privacy and data security

Privacy and data security are major concerns when it comes to using AI. With the increasing reliance on AI technologies, there is a growing need to ensure that personal and sensitive information is protected. AI systems often collect and analyze large amounts of data, which can include personal details, browsing history, and even biometric information. This raises questions about how this data is stored, shared, and used. The potential for data breaches and unauthorized access is a significant risk that needs to be addressed. Additionally, there is a concern about the potential misuse of AI-generated insights and predictions, which can have serious implications for individuals' privacy. To mitigate these risks, it is crucial to implement robust security measures, such as encryption, access controls, and regular audits, to safeguard user data and maintain trust in AI systems.
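Two of the measures named above, access controls and audits, can be sketched in a few lines. The example is a simplified illustration, not a production design: the roles, the permission names, and the in-memory `audit_log` list are all hypothetical, and a real system would load its policy from configuration and write audit records to tamper-resistant storage.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping, assumed for this example.
PERMISSIONS = {
    "analyst": {"read_aggregates"},
    "admin": {"read_aggregates", "read_personal_data"},
}

# In-memory audit trail; every access attempt is recorded, allowed or not.
audit_log = []

def is_allowed(role, action):
    """Return True if the role is granted the requested action."""
    return action in PERMISSIONS.get(role, set())

def access(user, role, action):
    """Check permission for an action and record the attempt for later audit."""
    allowed = is_allowed(role, action)
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed

# An analyst may read aggregate statistics but not personal records.
print(access("dana", "analyst", "read_aggregates"))     # True
print(access("dana", "analyst", "read_personal_data"))  # False
print(len(audit_log))                                   # 2
```

Recording denied attempts as well as granted ones is a deliberate choice here: a regular audit can then reveal who tried to reach personal data, not only who succeeded.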

Unemployment and job displacement

Unemployment and job displacement are among the key risks associated with the use of AI. As AI technology advances and automates more tasks, there is growing concern that it will significantly reduce job opportunities. Many jobs once performed by humans can now be done more efficiently by AI systems, which can displace workers and raise unemployment rates. AI may also shift the skills required for certain jobs, so that individuals must acquire new skills or risk being left behind in the job market. Policymakers and organizations need to address these concerns and develop strategies to mitigate the negative impact of AI on employment.

Safety risks

Autonomous weapons

Autonomous weapons, also known as lethal autonomous weapons systems (LAWS), are a growing concern in the field of AI. These are weapons that can independently select and engage targets without human intervention. Their development and deployment raise several ethical and moral questions. One of the main concerns is that these weapons could be used in an indiscriminate and disproportionate manner, leading to civilian casualties and violations of international humanitarian law. There is also a fear that autonomous weapons could lower the threshold for going to war, as they remove the direct human risk associated with military operations. The international community is currently engaged in discussions and debates to address the risks and establish guidelines for the responsible use of autonomous weapons.

Malfunctioning AI systems

Malfunctioning AI systems can pose significant risks in various domains. When AI systems fail to perform as intended, they can make incorrect decisions or provide inaccurate information, with serious consequences. In autonomous vehicles, for example, a malfunctioning AI system can cause accidents and injuries. In healthcare, an AI system that misdiagnoses a patient or recommends the wrong treatment can harm that patient's health. Malfunctioning AI systems can also contribute to bias and discrimination, as they may be trained on biased data or exhibit biased behavior. Robust testing, monitoring, and regulation are therefore needed to mitigate these risks.

Lack of human oversight

Lack of human oversight is one of the major risks associated with using AI. As AI systems become more advanced and autonomous, there is a growing concern that humans may lose control over these systems. Without proper human oversight, AI algorithms can make biased or unethical decisions, leading to serious consequences. Additionally, the lack of human involvement in the decision-making process can result in the inability to explain or understand the reasoning behind AI-generated outcomes. This lack of transparency and accountability poses significant challenges in ensuring the responsible and ethical use of AI technology.
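One common way to keep a human involved is to let the system act on its own only when it is confident and to send every other case to a person. The sketch below illustrates that pattern under stated assumptions: the threshold value, the function name, and the shape of the returned record are hypothetical and would need to be chosen for the application at hand.

```python
# Hypothetical cut-off: below this confidence, a person makes the decision.
REVIEW_THRESHOLD = 0.9

def route_decision(prediction, confidence, threshold=REVIEW_THRESHOLD):
    """Accept a model prediction only when its confidence meets the threshold.

    Low-confidence cases are left undecided and flagged for human review,
    with the model's output kept as a suggestion for the reviewer.
    """
    if confidence >= threshold:
        return {"decision": prediction, "decided_by": "model"}
    return {
        "decision": None,
        "decided_by": "human_review",
        "suggested": prediction,
    }

# A confident prediction is applied automatically.
print(route_decision("approve", 0.95))
# An uncertain prediction is deferred to a human reviewer.
print(route_decision("deny", 0.60))
```

Raising the threshold sends more cases to people and fewer to the model, so the value is a trade-off between workload and the degree of human control.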

Social impact

Inequality and AI

Inequality and AI have become closely intertwined in today's society. As artificial intelligence continues to advance and permeate various industries, it has the potential to exacerbate existing social and economic disparities. One of the main concerns is that AI technologies may disproportionately benefit those who already have access to resources and power, while leaving behind marginalized communities and individuals. This can further widen the gap between the rich and the poor, creating a digital divide that hinders social mobility and perpetuates inequality. Additionally, biases embedded in AI algorithms can reinforce discriminatory practices and amplify existing biases, leading to unfair outcomes. It is crucial to address these issues and ensure that AI is developed and deployed in a way that promotes inclusivity, fairness, and equal opportunities for all.

Manipulation and misinformation

Manipulation and misinformation are significant risks associated with the use of AI. With the increasing sophistication of AI algorithms, there is a growing concern about the potential for AI systems to be manipulated or used to spread false information. AI-powered algorithms can be programmed to generate and disseminate misleading or biased content, leading to the manipulation of public opinion or the spread of misinformation. This can have serious consequences for individuals, organizations, and society as a whole. It is crucial to develop robust safeguards and ethical guidelines to mitigate the risks of manipulation and misinformation in AI systems.

Loss of human connection

Loss of human connection is one of the significant risks associated with the use of AI. As technology continues to advance, there is a growing concern that the reliance on AI systems may lead to a decrease in meaningful human interactions. With AI taking over various tasks and decision-making processes, there is a potential for reduced personal connections and empathy. The human touch, emotions, and understanding that come with face-to-face interactions may be compromised as AI becomes more prevalent in our daily lives. It is crucial to strike a balance between the benefits of AI and the preservation of human connection to ensure a harmonious coexistence between humans and machines.

Legal and regulatory challenges

Liability for AI decisions

Liability for AI decisions is a pressing concern in today's rapidly advancing technological landscape. As artificial intelligence becomes more integrated into various industries and sectors, the question of who is responsible for the decisions made by AI systems becomes increasingly complex. Unlike humans, AI algorithms are not capable of moral reasoning or subjective judgment, which raises important questions about accountability and liability. If an AI system makes a decision that results in harm or damage, who should be held responsible? Should it be the developers who created the algorithm, the organization that deployed the AI system, or the AI system itself? These are the challenging questions that policymakers, legal experts, and technology companies are grappling with as they seek to establish a framework for AI liability. Finding a fair and effective solution is crucial to ensure that the benefits of AI can be realized while minimizing the potential risks and harm associated with its use.

Intellectual property rights

Intellectual property rights are a crucial aspect to consider when it comes to the risks of using AI. With the rapid advancements in AI technology, there is a growing concern about the protection of intellectual property. AI algorithms and models are becoming increasingly sophisticated and valuable, raising questions about who owns and controls the outputs generated by AI systems. Additionally, there is a risk of intellectual property infringement when AI systems are used to replicate or modify existing copyrighted works. It is essential for businesses and individuals to understand and navigate the complexities of intellectual property rights in the context of AI to ensure fair and legal use of AI technologies.

Regulating AI development and deployment

Regulating AI development and deployment is necessary to mitigate the risks associated with the use of artificial intelligence. As AI becomes more integrated into various aspects of our lives, clear guidelines and frameworks are needed to ensure its responsible and ethical use, covering issues such as data privacy, algorithmic bias, and potential job displacement. By implementing regulations, governments and organizations can foster trust and accountability in AI systems while still promoting innovation and societal benefits. Because the impact of AI transcends national boundaries, international collaboration is also essential to create global standards and frameworks for AI regulation. Overall, regulation is a necessary step towards harnessing the potential of AI while minimizing its risks.

Mitigating risks

Ethical guidelines for AI

Ethical guidelines for AI play a crucial role in mitigating the risks associated with its use. These guidelines provide a framework for ensuring that AI systems are developed and deployed in a responsible and accountable manner. They address important considerations such as fairness, transparency, privacy, and bias. By adhering to ethical guidelines, we can minimize the potential harm caused by AI and maximize its benefits for society. It is essential for organizations and policymakers to prioritize the development and implementation of robust ethical guidelines to foster trust and confidence in AI technologies.

Transparency and explainability

Transparency and explainability are central to managing the risks of using AI. As AI systems become more complex and sophisticated, it becomes harder to understand how they make decisions and predictions. Lack of transparency can lead to distrust and skepticism towards AI technologies, and without explainability it is difficult to identify and correct biases or errors in AI algorithms. Ensuring transparency and explainability in AI systems is therefore essential to mitigate the risks and build trust in the technology.
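For simple models, explainability can be as direct as showing how much each input contributed to the result. The sketch below uses a hand-written linear scoring model in which each feature's contribution is its weight multiplied by its value. The feature names, weights, and applicant values are invented for illustration, and more complex models generally require dedicated explanation techniques rather than this direct breakdown.

```python
def explain_linear_score(weights, bias, features):
    """Return the score of a linear model and each feature's contribution.

    The score is bias + sum(weight * value), so the per-feature products
    add up exactly to the part of the score not explained by the bias.
    """
    contributions = {
        name: weights[name] * value for name, value in features.items()
    }
    score = bias + sum(contributions.values())
    return score, contributions

# Hypothetical model weights and applicant data, assumed for this example.
weights = {"income": 0.5, "debt": -1.0, "years_employed": 0.25}
applicant = {"income": 4.0, "debt": 1.5, "years_employed": 2.0}

score, contributions = explain_linear_score(weights, bias=0.5, features=applicant)
print(score)  # 1.5

# List features from most to least influential, regardless of sign.
ranked = sorted(contributions, key=lambda name: abs(contributions[name]), reverse=True)
for name in ranked:
    print(name, contributions[name])
# income 2.0
# debt -1.5
# years_employed 0.5
```

A breakdown like this lets a reviewer see at a glance which inputs drove a decision and whether any of them should not have been used at all.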

Collaboration between stakeholders

Collaboration between stakeholders is essential to using AI technology responsibly. To mitigate the risks associated with AI, stakeholders such as government organizations, businesses, and researchers need to work together. By sharing knowledge, resources, and expertise, they can collectively address the ethical, legal, and social implications of AI and develop guidelines and regulations that ensure its responsible and safe use. Collaboration also lets stakeholders pool their efforts to create AI systems that are unbiased, transparent, and accountable, harnessing the potential of AI while minimizing its risks.